Co-authored by Professor Philip Howard, Director of the Oxford Internet Institute (OII), and Samantha Bradshaw, a researcher at the OII, this global investigation of disinformation is the only regular inventory of its kind that examines how politicians use algorithms, automation, and big data to shape public life. The 2019 report shows a dramatic increase in the use of computational propaganda within just the last two years, with China becoming a global player in this field for the first time.

Who orders and who executes computational propaganda?

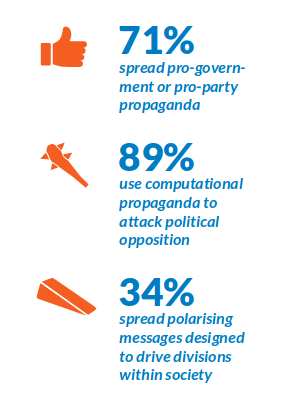

In all 70 countries where computational propaganda was detected, government agencies, political parties, or both hire cyber troops tasked with manipulating public opinion online.

Government agencies using computational propaganda were detected in 44 countries, while political parties or politicians running for office used it in 45 countries. These figures include the use of advertising to target voters with manipulated media, as in India, and instances of illegal micro-targeting, such as the use of the firm Cambridge Analytica in the UK Brexit referendum.

Cyber troops use four types of fake accounts to spread computational propaganda: bot, human, cyborg, and stolen accounts, but the first two are the most common. Bots are highly automated accounts designed to mimic human behavior online; a small team can manage thousands of them to achieve the scale of presence it needs on social media. Human-run accounts are even more common because they can engage in conversations by posting comments or tweets and can carry out private messaging effectively.

Human-operated accounts were found in 60 out of the 70 countries. They’re particularly effective in countries with a cheap labor force, such as China or Russia, where thousands of citizens, in particular students, are hired to manage accounts.

Moreover, in countries like Vietnam or Tajikistan, state actors encourage cyber troops to use their real accounts rather than create fake ones. As social media companies become more aggressive in taking down accounts associated with cyber troop activity, spreading pro-government propaganda, trolling political dissidents, or mass-reporting content becomes more effective through real accounts. The co-option of real accounts is predicted to become a more prominent strategy.

Cyborg accounts combine automation with human action, while stolen high-profile accounts are used strategically by cyber troops to censor free speech by revoking the rightful owner's access to the account. In authoritarian regimes, computational propaganda has become a tool of information control that is used strategically in combination with surveillance, censorship, and threats of violence.

The business of computational propaganda

Computational propaganda remains a big business. The researchers found large amounts of money being spent on "PR" or strategic communication firms to run campaigns in countries such as the Philippines, Guatemala, and Syria. Contracts with global companies like Cambridge Analytica can be worth millions of dollars.

Some teams consist of a handful of people who manage hundreds of fake accounts. In other countries, such as China, Vietnam, or Venezuela, tens of thousands of people are hired by the state to actively shape opinions and police speech online.

As a result, computational propaganda skills have become valuable and are spreading worldwide. For example, during the investigations into cyber troop activity in Myanmar, evidence emerged that military officials had been trained by Russian operatives in how to use social media. Similarly, cyber troops in Sri Lanka received formal training in India. Leaked emails also showed that Ethiopia's Information Network Security Agency sent staff members to receive formal training in China.

Main strategies of computational propaganda

1. The creation of disinformation or manipulated media was the most popular strategy, used in 52 of the 70 countries. Cyber troops actively created content such as memes, videos, fake news websites, or manipulated media in order to mislead users.

2. Data-driven strategies form the second important group. The content created by cyber troops is targeted at specific communities or segments of users, including through targeted advertising and micro-targeting to maximize impact.

3. Trolling or harassment was used in 47 countries. Especially in authoritarian regimes, cyber troops censor speech and expression through the mass-reporting of content or accounts. Posts by activists, political dissidents, or journalists often get reported by a coordinated network of cyber troop accounts in order to manipulate the automated systems that social media companies use to take down inappropriate content.

Who is the leader in computational propaganda?

It’s hard to name one country, although there are plenty of countries where the use of computational propaganda is especially widespread. Among the most dangerous are authoritarian regimes that suppress not only online activism but any alternative form of expression or protest.

The researchers classify each country's cyber troop capacity as minimal, low, medium, or high, depending on team size and the budget the country spends on propaganda.

High cyber troop capacity involves large numbers of staff and large budgetary expenditures on psychological operations or information warfare. There may also be significant funds spent on research and development, as well as evidence of a multitude of techniques being used. These teams operate not only during elections but employ full-time staff dedicated to shaping and controlling the information space. The table below lists all countries with the most developed computational propaganda.

The vast majority of countries have medium cyber troop capacity, which is still considerable.

Another important measure of a country’s cyber troop capacity is whether it attempts to exert foreign or global influence. Facebook and Twitter, which have begun publishing limited information about influence operations on their platforms, have taken action against cyber troops engaged in foreign influence operations in seven countries: China, India, Iran, Pakistan, Russia, Saudi Arabia, and Venezuela.

Although this measure does not capture the extent to which foreign influence operations are taking place, we can confidently begin to build a picture of this highly secretive phenomenon.

Computational propaganda has become a normal part of the digital public sphere. These techniques will also continue to evolve as new technologies, including Artificial Intelligence, Virtual Reality, and the Internet of Things, are poised to fundamentally reshape society and politics. But since computational propaganda is a symptom of long-standing challenges to democracy, solutions must take into account citizens' need for access to high-quality information and their ability to come together to debate, discuss, deliberate, empathize, and make concessions. Are social media platforms really creating a space for public deliberation and democracy? Or are they amplifying content that keeps citizens addicted, disinformed, and angry?
